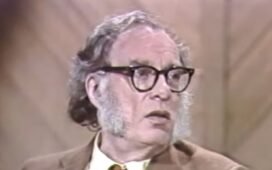

You could say that we live in the age of artificial intelligence, though that description feels truer of advertising than of any other aspect of our lives. “If you want to sell something to people today, you call it AI,” says Yuval Noah Harari in the new Big Think video above, even if the product has only the vaguest technological association with that label. To determine whether something should actually be called artificially intelligent, Harari suggests asking whether it can “learn and change by itself and come up with decisions and ideas that we don’t anticipate,” and indeed can’t anticipate. That AI-enabled waffle iron being pitched to you probably doesn’t make the cut, but you may already be interacting with numerous systems that do.

As the author of the global bestseller Sapiens and other books concerned with the long arc of human civilization, Harari has given a good deal of thought to how technology and society interact. “In the twentieth century, the rise of mass media and mass information technology, like the telegraph and radio and television” formed “the basis for large-scale democratic systems,” but also for “large-scale totalitarian systems.”

Unlike in the ancient world, governments could at least begin to “micromanage the social and economic and cultural lives of every individual in the country.” Even the vast surveillance apparatus and bureaucracy of the Soviet Union “could not surveil everybody all the time.” Alas, Harari anticipates, things will be different in the AI age.

Human-operated organic networks are being displaced by AI-operated inorganic ones, which “are always on, and therefore they might force us to be always on, always being watched, always being monitored.” As they gain dominance, “the whole of life is becoming like one long job interview.”

At the same time, even if you were already feeling inundated by information before, you’ve more than likely felt the waters rise around you due to the seemingly limitless production capacity of AI. One individual-level strategy Harari recommends to counteract the flood is going on an “information diet,” restricting the flow of that “food of the mind,” which only sometimes has anything to do with the truth. If we binge on “all this junk information, full of greed and hate and fear, we will have sick minds”; perhaps a period of abstinence can restore a certain degree of mental health. You might consider spending the rest of the day taking in as little new information as possible, just as soon as you finish catching up on Open Culture, of course.


Based in Seoul, Colin Marshall writes and broadcasts on cities, language, and culture. His projects include the Substack newsletter Books on Cities and the book The Stateless City: a Walk through 21st-Century Los Angeles. Follow him on the social network formerly known as Twitter at @colinmarshall.