Meta’s taking some new steps to crack down on spammy content on Facebook, in order to combat the rise of inauthentic profiles seeking to game its algorithms for engagement.

Though AI has become a bigger part of this process, and Meta is actively encouraging people to use it, that’s not a focus of this new junk content crackdown. That seems like an oversight, but then again, encouraging AI use on one hand, and restricting it on the other, may not work for Facebook’s longer-term goals.

What Facebook is cracking down on is more overt engagement bait, including irrelevant post captions and repeated posts from coordinated networks of accounts.

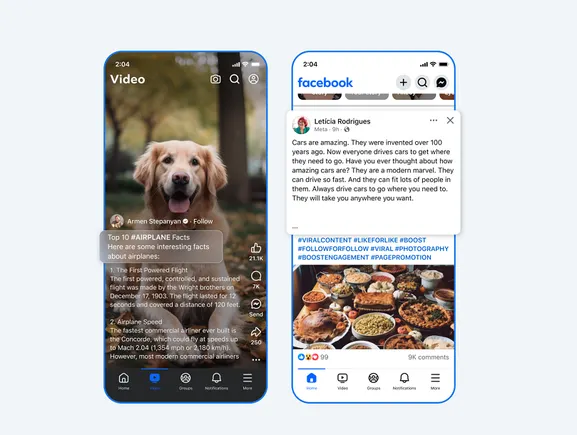

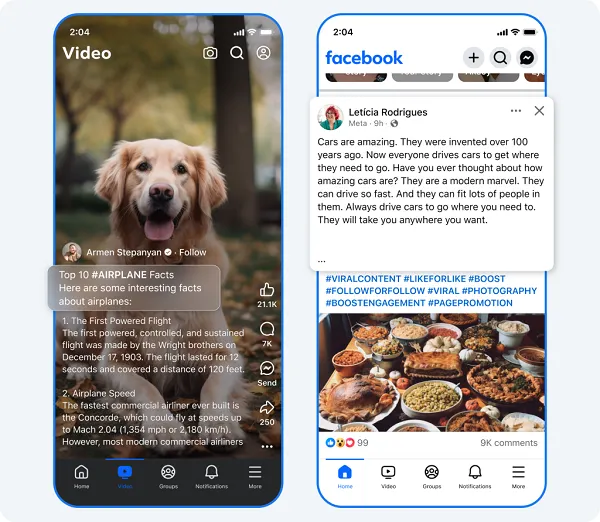

First off, Meta’s looking to combat the use of unrelated captions and the overuse of hashtags.

As explained by Meta:

“Some accounts post content with long, distracting captions, often with an inordinate amount of hashtags. While others include captions that are completely unrelated to the content – think a picture of a cute dog with a caption about airplane facts. Accounts that engage in these tactics will only have their content shown to their followers and will not be eligible for monetization.”

Why do people do this?

Well, there are two theories. For one, some have speculated that these informative-type caption texts include various keywords that can help improve reach, by appealing to Facebook’s algorithm. Incorporating more knowledge-type overviews gives the system more keywords to go on, and that, apparently, can increase reach in some instances.

Longer captions also encourage more reading, and the longer it takes someone to read the caption, the more times the video plays through, thus increasing engagement.

I’m not sure that either of these theories is true, but that, seemingly, is what’s inspired the rise in these long-winded, sometimes off-topic descriptions.

Meta’s also looking to crack down on networks of profiles that share the same content.

“Spam networks often create hundreds of accounts to share the same spammy content that clutters people’s Feed. Accounts we find engaging in this behavior will not be eligible for monetization and may see lower audience reach.”

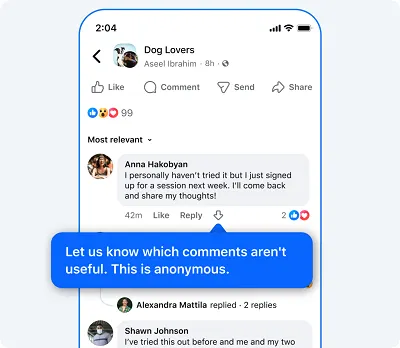

Facebook’s also trying out comment downvotes once again, as a means to combat less valuable, and likely spammy, contributions.

The idea of Facebook’s comment downvotes is to combat spam, but as with every other time that Facebook has tried this (in 2018, 2020, and 2021, and on Instagram this year), there’ll be confusion about what that down arrow actually means. Some will use this to indicate comments that they don’t like, as opposed to problematic remarks, and that variance obviously skews the data provided by this measure.

But Meta clearly still sees some value in it, so it’s trying out comment downvotes for spam and junk replies once again.

Meta’s also looking to improve its efforts to combat impersonation, and it’s promoting its Rights Manager tools to help tackle imposters and fakes.

In combination, these should help Meta to reduce the amount of clear spammy junk in user feeds.

But it won’t tackle this type of misuse:

As noted, AI-generated spam is now rife on Facebook, with The Social Network’s audience seemingly more susceptible to these fake depictions.

That seems like a more significant problem than the above-noted elements, but again, given that Meta’s also pushing you to generate images through its AI tools at every turn, I don’t know that it’s going to be able to truly crack down on this.

So while combating all kinds of spam and junk is important, where possible, I’m not sure Facebook is truly focused on the right elements just yet.