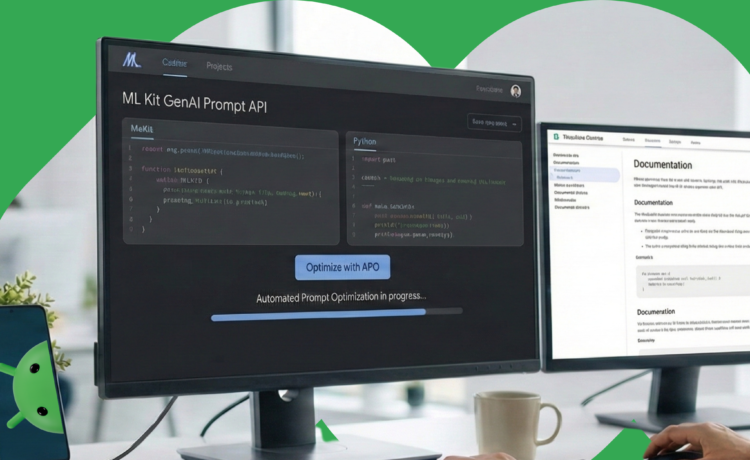

Automated Prompt Optimization (APO)

To further help bring your ML Kit Prompt API use cases to production, we are excited to announce Automated Prompt Optimization (APO) on Vertex AI, targeting on-device models. APO is a tool that helps you automatically find the optimal prompt for your use cases.

The era of on-device AI is no longer a promise; it is a production reality. With the release of Gemini Nano v3, we are placing unprecedented language understanding and multimodal capabilities directly into the palms of users. The Gemini Nano family of models gives us wide coverage of supported devices across the Android ecosystem. But for developers building the next generation of intelligent apps, access to a powerful model is only step one. The real challenge lies in customization: how do you tailor a foundation model to expert-level performance for your specific use case without breaking the constraints of mobile hardware?

In the server-side world, larger LLMs tend to be highly capable and require less domain adaptation. When adaptation is needed, more advanced techniques such as LoRA (Low-Rank Adaptation) fine-tuning are feasible. However, the architecture of Android AICore prioritizes a shared, memory-efficient system model, so deploying a custom LoRA adapter for every individual app is challenging on this shared system service.

But there is an alternative path that can be equally impactful. By leveraging Automated Prompt Optimization (APO) on Vertex AI, developers can achieve quality approaching that of fine-tuning while working within the native Android execution environment. By focusing on better system instructions, APO lets developers tailor model behavior with greater robustness and scalability than traditional fine-tuning solutions.
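To make the idea of optimizing a system instruction concrete, here is a minimal sketch of the selection step only: scoring a few candidate instructions against labeled examples and keeping the best one. It is not how APO on Vertex AI is implemented, which this post does not describe. The names `score`, `pick_best_instruction`, and `toy_model` are hypothetical, and `toy_model` is a stand-in for a real on-device model call.

```python
from typing import Callable, Sequence

Example = tuple[str, str]  # (user input, expected output)


def score(instruction: str,
          model: Callable[[str, str], str],
          examples: Sequence[Example]) -> float:
    """Fraction of examples where the model output exactly matches the label."""
    if not examples:
        return 0.0
    hits = sum(model(instruction, text).strip().lower() == expected.lower()
               for text, expected in examples)
    return hits / len(examples)


def pick_best_instruction(candidates: Sequence[str],
                          model: Callable[[str, str], str],
                          examples: Sequence[Example]) -> tuple[str, float]:
    """Return the highest-scoring candidate system instruction and its score."""
    scored = [(score(c, model, examples), c) for c in candidates]
    best_score, best = max(scored, key=lambda pair: pair[0])
    return best, best_score


def toy_model(instruction: str, text: str) -> str:
    """Hypothetical stand-in for a model call; illustration only."""
    label = "positive" if "love" in text.lower() else "negative"
    # Assumption: the toy model only answers tersely when told to.
    if "one word" in instruction.lower():
        return label
    return f"The sentiment seems to be {label}."


examples = [("I love this app", "positive"), ("It keeps crashing", "negative")]
candidates = [
    "Classify the sentiment.",
    "Classify the sentiment. Answer with one word: positive or negative.",
]
best, best_score = pick_best_instruction(candidates, toy_model, examples)
```

In this toy setup the more explicit instruction wins because its outputs match the expected labels exactly. A real optimizer would also generate and refine candidates rather than choose from a fixed list, and would typically use a richer evaluation metric than exact match.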

Note: Gemini Nano v3 is a quality-optimized version of the highly acclaimed Gemma 3n model. Any prompt optimizations made on the open-source Gemma 3n model will apply to Gemini Nano v3 as well. On supported devices, ML Kit GenAI APIs leverage the nano-v3 model to maximize quality for Android developers.